The review bottleneck: when AI codes faster than you can read

In just a few months, AI coding assistants went from autocompleting lines to shipping entire features in minutes. Anyone who knows how to use AI well can produce several times more code than before. However, AI is not perfect, and we, as humans, still need to review the code it produces. Reviewing someone else’s code is not the most exciting part of the job. Frankly, it’s boring. With thousands of lines to go through, it’s easy to skim and miss the important parts. Let’s figure out how to make the process less painful and time-consuming.

The review problem

When you write code yourself, you have a mental model of what it does. AI-generated code doesn’t give you that. All you get with a 500-line diff is a short summary of changes. To review it properly, you need to understand the context, verify correctness, and catch the subtle bugs. Let’s face it: the same applies to your coworkers’ code, so none of this is new. The problem begins when there is so much code to review that you can’t keep up, and your review process becomes the bottleneck.

Don’t let AI repeat its mistakes

What’s worse, your AI assistant won’t learn from its mistakes unless you make it. It will fix the issues you point out, but it will make the same mistakes again in a later session. AI agents always start with a blank page; their only persisted context is your code and memory files (AGENTS.md/CLAUDE.md). If you notice during review that the AI isn’t following specific patterns or code conventions, or keeps forgetting to write tests, capture that. Add instructions to your memory files or use dedicated Skills so the mistakes aren’t repeated. You can also enforce rules mechanically by adding tools like linters to pre-commit hooks. The goal is to get style-related mistakes as close to zero as possible, so you can focus on the important part: what the code actually does.
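As a rough illustration of enforcing a rule in a pre-commit hook, here is a minimal sketch of a check for the "forgot to write tests" case. Everything specific in it is an assumption made for the example: the `src/` and `tests/test_<name>.py` layout, and the function names `missing_tests`, `staged_files`, and `main`. A real project would more likely plug an existing linter into its hook setup.

```python
"""Pre-commit check sketch: flag staged source files with no staged test."""
import subprocess
import sys
from pathlib import PurePosixPath


def missing_tests(changed_files):
    """Return files under src/ whose tests/test_<name>.py is not in the change set.

    The src/ and tests/test_*.py layout is an assumed convention, not a standard.
    """
    changed = set(changed_files)
    missing = []
    for path in changed_files:
        p = PurePosixPath(path)
        # Only consider Python files that live under the top-level src/ directory.
        if p.suffix != ".py" or not p.parts or p.parts[0] != "src":
            continue
        if f"tests/test_{p.name}" not in changed:
            missing.append(path)
    return missing


def staged_files():
    """List added, copied, or modified files in the git index."""
    result = subprocess.run(
        ["git", "diff", "--cached", "--name-only", "--diff-filter=ACM"],
        capture_output=True, text=True, check=True,
    )
    return [line for line in result.stdout.splitlines() if line]


def main():
    """Print offenders to stderr; a non-zero return value blocks the commit."""
    offenders = missing_tests(staged_files())
    for path in offenders:
        print(f"no staged test for {path}", file=sys.stderr)
    return 1 if offenders else 0

# In the hook script itself, finish with: raise SystemExit(main())
```

A check like this is deliberately dumb. It only needs to catch the recurring mistake before it reaches review, so the agent gets the feedback without you having to write it.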

Understanding the context

One of the biggest pain points in understanding the code is how the changes are organized. When reviewing a PR on GitHub, you’ll see all the changes grouped by file in alphabetical order. For a small PR, that’s fine, but when changes are spread across dozens of files and hundreds of lines of code, reading from top to bottom won’t get you to an understanding quickly. You’re left jumping between files, trying to piece together the story. It takes a lot of time and effort to see the big picture, and once you finally do, you still need to go through every file so you don’t miss anything.

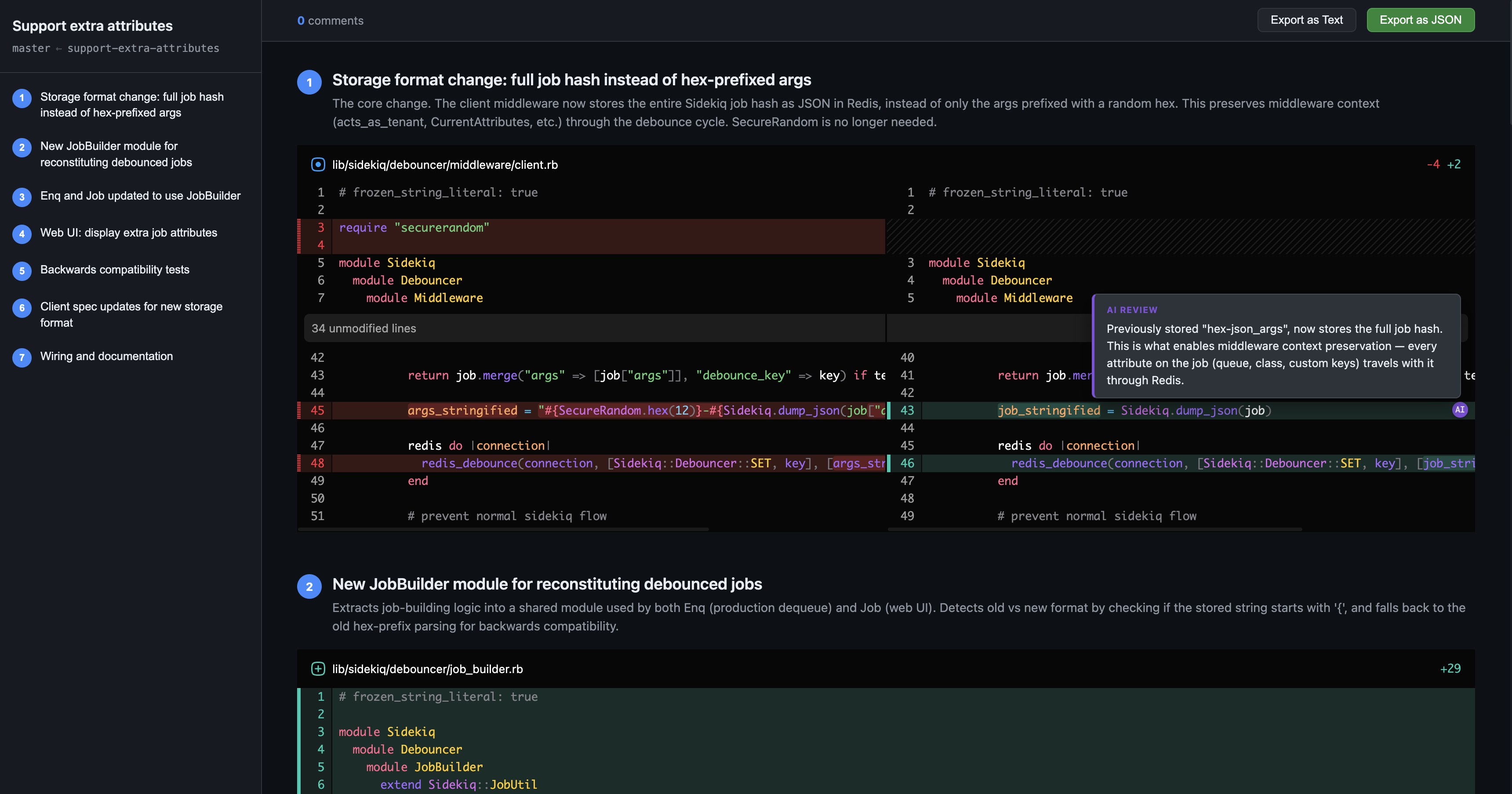

My approach: let AI organize the review

I’ve been experimenting with a different workflow. Since AI wrote the code, it might as well help me review it. The idea is to let the assistant organize the review process. It can split the diff into logical units and add meaningful annotations. Then I can review it in a more structured way. Annotations help me understand the context of the change, making the whole thing less overwhelming.

Now the fun part: how do you actually do it?

I built a Claude Code skill just for that.

Here’s what it does:

- Splits the diff into blocks - individual hunks, each representing one continuous change region (multiple changes in one file can result in multiple blocks)

- Groups blocks by logical concern - not by file, but by what they actually do together. A “new API endpoint” section gathers the code in the route, controller, tests, and type definitions as one unit

- Adds inline annotations - short explanations of why something changed, not what changed (you can read the diff, right?)

- Generates an interactive HTML page - split-view diffs with syntax highlighting where you can click any line to leave comments, then export your feedback (UI included in the skill)

After reviewing, I export my comments and feed them back into the agent so it can fix the issues.
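To give a feel for that feedback loop, here is a small sketch that turns review comments into a message for the agent. The skill’s actual export format isn’t described here, so the `ReviewComment` fields and the `to_agent_prompt` function are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class ReviewComment:
    # Hypothetical shape of one exported comment.
    file: str
    line: int
    text: str


def to_agent_prompt(comments):
    """Render review comments as a plain-text task list, grouped by file."""
    by_file = {}
    # Sort by file, then line, so the agent sees each file's comments in order.
    for c in sorted(comments, key=lambda c: (c.file, c.line)):
        by_file.setdefault(c.file, []).append(c)
    parts = ["Please address the following review comments:"]
    for file, items in by_file.items():
        parts.append(f"\n{file}")
        for c in items:
            parts.append(f"- line {c.line}: {c.text}")
    return "\n".join(parts)
```

Grouping by file and line keeps each comment tied to a concrete location, which makes it harder for the agent to "fix" the wrong place.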

Feel free to try it out on your own code:

Install the skill:

```shell
npx skills add kukicola/skills/help-me-review
```

Use in the agent:

```
/help-me-review [PR or branch or commit]
```

If you work on public repositories, you can also try out Devin Review.

Don’t let it become AI slop

The developer role is shifting. Writing code is no longer the most time-consuming part of the job. As software engineers, we need to adapt and use AI to deliver value faster at every step of the process. Without proper orchestration and review, you may end up with so-called “AI slop”: huge technical debt, unmaintainable code, and security vulnerabilities.